Evolution in artificial life

1996/03/01 Etxeberria, Arantza | Ibañez, Jesus Iturria: Elhuyar magazine

When explaining what living beings are, textbooks offer lists of their characteristics in the absence of a better definition (Table 1). Moreover, life is not a homogeneous phenomenon: one of its most important characteristics is its diversity. At present there are approximately 5 million living species on Earth (vertebrates account for only 50,000). However fascinating life may be, until now not even the most complex artificial system developed by science or engineering had been able to match living beings. Or so it seemed.

- Life is a pattern that occurs in space-time.

- Life requires reproduction (a living being either reproduces itself or is the product of a reproductive process).

- Living beings store the information needed to form the living being itself.

- Through metabolism, living beings draw on the matter and energy of their environment to build their own internal matter and energy.

- Living beings interact functionally with the environment.

- The parts of a living being are strongly interdependent.

- Living beings remain stable under external perturbations.

- Life evolves.

In 1987 a congress with a curious title was held at Los Alamos (USA). It gave rise to a new field for studying life and its characteristics: Artificial Life (AL). So far, biology has studied life as we know it, while the role of Artificial Life is to investigate life as it could be. Biology studies the contingent characteristics that have appeared in the history of evolution on Earth, that is, those that are not essential and could have been otherwise. Artificial Life, by contrast, aims to build a universal science of life.

This task has two objectives. The first is to analyze the consequences that new computer systems may have for the life sciences. The second is to devise new approaches to many problems that so far had no clear solution, taking living beings and their characteristics as a source of inspiration. Three types of artificial systems have been developed. In software, programs are written that exhibit the characteristics of living beings. In hardware, robots are built or computer chips are designed through evolution. In wetware, artificial components made of molecules are created (for example, new RNA molecules synthesized in the test tube).

- 3,800 million years ago: life

- 2,000 million years ago: eukaryotic cells (sexual reproduction)

- 700 million years ago: multicellular organisms

- 400 million years ago: vertebrate animals

- 7.5 to 5 million years ago: presence of hominids

In recent years many researchers have turned to Artificial Life for a range of purposes:

- to create artificial life;
- to strengthen the foundations of theoretical biology;
- to find better models for explaining complex systems;
- to use natural strategies in the development of computer systems;
- to build machines or robots that behave appropriately in unknown environments (for example, on another planet);
- to pose philosophical problems with tools more sophisticated than purely conceptual ones.

In this way, Artificial Life would provide prostheses that push back the limits of our thinking.

The aim of this article is to present the studies of evolution carried out within Artificial Life. Focusing on artificial evolution, we will analyze three different scenarios. In the first, computer science uses biological evolution to solve problems in its own field. The second presents the reverse situation: biology uses computational tools to simulate the evolutionary processes that occur in nature. In the last example, the border between computer science and biology is far from clear.

Genetic algorithms

Nature on Earth is the result of an evolutionary process, and the forms we know today took a very long time to appear (Table 2). Before evolution was taken into account, there was no need for a time scale of millions of years. Until the eighteenth and nineteenth centuries, the age of the Earth was calculated by starting from Adam and adding up the lifespans in the biblical genealogies. As a result, the Earth was thought to be much younger: according to these calculations, creation was completed at 9 am on 23 October 4004 BC. Some present-day researchers show a similar attitude, as if Artificial Life were a job to be done overnight. Given the time evolution has needed, however, that is a naive mentality.

Although the idea of evolution predates Darwin, he proposed the most widely accepted mechanism to explain it: natural selection. Darwin was an expert naturalist who spent five years exploring nature and different species around the world. Nevertheless, he based the evolutionary mechanism on observing the techniques used on farms to breed pigeons or cattle. Breeders work with small variations, combining them to obtain a particular trait. In nature the process would be similar, except that there is no conscious breeder. Instead, a blind, undirected force chooses the most suitable changes.

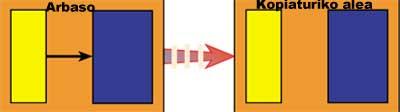

In Artificial Life, this Darwinian process has been used to produce artificial evolution based on three principles: inheritance, variation and selection (Figure 1). Genetic Algorithms are artificial models of this kind. As in the artificial breeding that suggested natural selection to Darwin, the researcher decides the objective towards which evolution is directed.

A Genetic Algorithm is useful for solving a problem whenever two conditions hold. First, the solution space must be known and bounded. Second, it must be feasible to provide an objective function that measures the fitness of each solution. Very often the solution to the problem is itself an algorithm, encoded as a program, an automaton, a neural network or an expert system.
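The sketch below makes the two conditions concrete. It is a minimal example that assumes a tiny 0/1 knapsack problem; the problem and its numbers are hypothetical and do not come from the article. Each candidate solution is a bit string saying which items are taken, so the solution space is known and bounded (2^n strings). The objective function `fitness` scores each string by the value it collects and gives zero to overweight choices.

```python
from itertools import product

# Hypothetical knapsack instance: (value, weight) of each item, and a capacity.
ITEMS = [(60, 10), (100, 20), (120, 30)]
CAPACITY = 50

def fitness(genome):
    """Objective function: total value of the chosen items, or 0 if overweight."""
    value = sum(v for bit, (v, _) in zip(genome, ITEMS) if bit)
    weight = sum(w for bit, (_, w) in zip(genome, ITEMS) if bit)
    return value if weight <= CAPACITY else 0

# The solution space is finite: every bit string of length len(ITEMS).
space = list(product([0, 1], repeat=len(ITEMS)))
best = max(space, key=fitness)
print(len(space), best, fitness(best))  # 8 (0, 1, 1) 220
```

Exhaustive search works here only because the instance is tiny. A genetic algorithm is meant for spaces too large to enumerate, but it still needs exactly this kind of fitness function.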

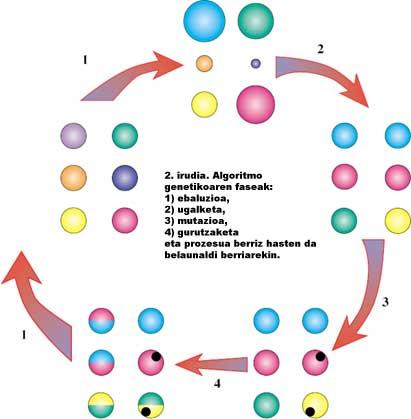

Our aim is to find the best possible solution. To apply the principles of evolution, we first generate a set of random solutions that form the initial generation (most of them may be inadequate). Each subsequent generation is then derived from the previous one through the following steps (Figure 2):

- Evaluation: the fitness function is applied to each individual in the population.

- Reproduction: the results of the evaluation determine how likely each individual is to pass into the next generation.

- Mutation: the selected individuals undergo random local changes.

- Crossover: pairs of individuals exchange some of their sections.

This process continues until the optimal solution is found or a maximum number of generations is reached. The results keep improving, and we can choose how far to push them. Moreover, using a genetic algorithm requires no specific knowledge of the problem. For many problems for which it is very hard to develop efficient algorithms, genetic algorithms find very good solutions. This has made industry one of the main fields of application of Artificial Life.
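The generational loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation. It assumes a toy "OneMax" objective (maximize the number of 1 bits in the genome), and the parameter values are arbitrary. It also uses elitism, meaning the best individual is copied unchanged into the next generation, which the article does not mention. Crossover is applied before mutation here, a common ordering.

```python
import random

GENOME_LENGTH = 20
POP_SIZE = 30
MUTATION_RATE = 0.02     # per-bit probability of a random local change
MAX_GENERATIONS = 200    # stopping criterion if the optimum is never found

def fitness(genome):
    # Hypothetical objective ("OneMax"): count the 1 bits; optimum = GENOME_LENGTH.
    return sum(genome)

def select(population, scores, rng):
    # Fitness-proportional ("roulette wheel") selection; +1 avoids all-zero weights.
    return rng.choices(population, weights=[s + 1 for s in scores], k=1)[0]

def crossover(a, b, rng):
    # One-point crossover: the two parents exchange the sections after a cut point.
    cut = rng.randint(1, len(a) - 1)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]

def mutate(genome, rng):
    # Flip each bit with a small probability.
    return [1 - g if rng.random() < MUTATION_RATE else g for g in genome]

def run_ga(seed=0):
    rng = random.Random(seed)
    # Initial generation: random solutions, most of them poor.
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(POP_SIZE)]
    for generation in range(MAX_GENERATIONS):
        scores = [fitness(g) for g in population]            # evaluation
        best = max(population, key=fitness)
        if fitness(best) == GENOME_LENGTH:                    # optimum found: stop
            return generation, best
        next_population = [best]                              # elitism (assumption)
        while len(next_population) < POP_SIZE:
            parent_a = select(population, scores, rng)        # reproduction
            parent_b = select(population, scores, rng)
            for child in crossover(parent_a, parent_b, rng):  # crossover
                next_population.append(mutate(child, rng))    # mutation
        population = next_population[:POP_SIZE]
    return MAX_GENERATIONS, max(population, key=fitness)

generation, best = run_ga()
print(generation, fitness(best))
```

Note that `fitness` is the only problem-specific part. The selection, crossover and mutation operators know nothing about the problem, which is why a genetic algorithm needs no specific knowledge of it.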

Artificial worlds

One of the best-known examples of Artificial Life is the artificial world. This is a simulated environment that brings together everything inside it: living and inanimate beings, structured space, different times, and so on. In these worlds, artificial organisms live their whole lives: birth, learning, feeding, fleeing predators... Their behavior and evolution can often be watched live on the computer screen. Aware of these possibilities, researchers have used artificial worlds to investigate various aspects of evolution. Examples include the relationship between evolution and learning, sexual selection, species dynamics, predator-prey relationships and the emergence of language.

A good example of such work is the world devised by Werner and Dyer. The question they wanted to investigate was: how can a communication language arise? Rather than starting from human language, they looked at animal communication, taking as inspiration the mating calls of frogs. To analyze the evolution of communication, they studied how innate signals develop within a species.

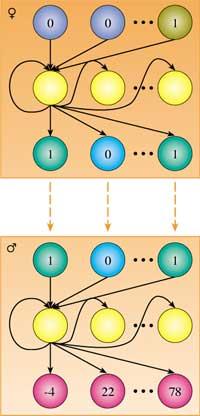

To do this, they made a grid of cells the home of the artificial animals. Each cell of the grid can be empty or occupied, and there are equal numbers of females and males. The animals' task is to find a mate and produce offspring. Males are blind and cannot see females. Females cannot move, so to attract males they must guide them with their calls. The behavior of each animal is controlled by a neural network encoded in a genome (Figure 3). Evolution then proceeds by means of a genetic algorithm.
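The following minimal sketch shows how a genome can encode an animal's controller. It assumes a single-layer network with hypothetical sizes (four call types, four movement actions); Werner and Dyer's actual networks and encoding may differ. The genome is a flat list of weights that is reshaped into a weight matrix. The male picks the action with the strongest output for the call he hears.

```python
import random

SIGNALS = 4                        # hypothetical number of distinct female calls
ACTIONS = ["stay", "forward", "turn_left", "turn_right"]
N_INPUTS = SIGNALS + 1             # one extra input unit for "heard nothing"

def random_genome(rng):
    # The genome is a flat list of connection weights, one per input-output pair.
    return [rng.uniform(-1, 1) for _ in range(N_INPUTS * len(ACTIONS))]

def decode(genome):
    # Reshape the flat genome into a weight matrix: one row per input unit.
    n = len(ACTIONS)
    return [genome[i * n:(i + 1) * n] for i in range(N_INPUTS)]

def male_action(genome, heard):
    """Single-layer network: `heard` is a signal index, or None for silence."""
    weights = decode(genome)
    inputs = [0.0] * N_INPUTS
    inputs[SIGNALS if heard is None else heard] = 1.0   # one-hot input
    outputs = [sum(inputs[i] * weights[i][j] for i in range(N_INPUTS))
               for j in range(len(ACTIONS))]
    return ACTIONS[outputs.index(max(outputs))]         # strongest output wins

rng = random.Random(1)
genome = random_genome(rng)
print(male_action(genome, 2), male_action(genome, None))
```

Because the weights start out random, the link between a call and a movement is arbitrary at first. The genetic algorithm must tune the weights so that males respond to calls in ways that bring them to females.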

For communication to occur, that is, for the female to attract the male, the female must emit signals that the males understand, and the males must come to interpret those signals. The relationship between the female's signal and the male's movement is arbitrary. The goal is for the population to converge on a shared interpretation of the signals. When a male reaches a female, they mate and produce two offspring: one female and one male.
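The reproduction rule just described can be sketched in Python as follows. The sketch makes several assumptions of its own: genomes are lists of weights, each child is produced by uniform crossover followed by small Gaussian mutations, and animals are represented as dictionaries. The function names `mate` and `try_mating` are hypothetical and are not Werner and Dyer's code.

```python
import random

def mate(mother, father, rng, mutation_sd=0.05):
    """Two offspring, one female and one male, from the parents' genomes."""
    def child_genome():
        # Uniform crossover (each weight from either parent) plus Gaussian mutation.
        return [rng.choice((m, f)) + rng.gauss(0.0, mutation_sd)
                for m, f in zip(mother, father)]
    return ({"sex": "female", "genome": child_genome()},
            {"sex": "male", "genome": child_genome()})

def try_mating(male, female, rng):
    """Mating happens only when the male has reached the female's cell."""
    if male["pos"] != female["pos"]:
        return []
    return list(mate(female["genome"], male["genome"], rng))

rng = random.Random(3)
male = {"pos": (2, 5), "genome": [0.1, -0.4, 0.7]}
female = {"pos": (2, 5), "genome": [0.3, 0.2, -0.5]}
children = try_mating(male, female, rng)
print([c["sex"] for c in children])  # ['female', 'male']
```

Only males that actually find a female pass on their genes. That is the selection pressure that pushes the population towards a shared interpretation of the calls.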

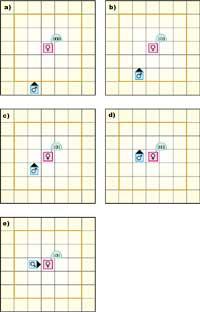

Over the generations, the experiment evolved in five stages:

1) The behavior of males and females is random.
2) Males that tend to stay still disappear.
3) Most males tend to move forward, and those that tend to turn disappear.
4) The males that appear at this stage know how to turn when they reach the female's row or column, while females that emit meaningful signals become more and more numerous. Males that do not hear a female move straight ahead and turn when they get close.
5) Signals come to be used to express more situations.

Figure 4 shows an episode after the language has developed.

Computer ecosystems

As we have seen, artificial worlds try in some way to reflect the physical and biological laws of nature, and the computer environment is treated as an abstraction of real situations. The last approach we will analyze, however, is very different. In the paradigm of computer ecosystems, the principles of digital information predominate, with no attempt to reproduce the real world. The agents involved are therefore simply programs, usually written in a specific assembly language. What they compete for are computational resources, such as memory and execution time, and the resulting dynamics revolve around how these resources are managed.

Scientific American

In 1984, in the pages of Scientific American, Dewdney proposed a new computer game: Core War. Two battling programs are loaded into the memory of a virtual computer called MARS, and each tries to destroy the other. Each program was regarded as an autonomous being. When designing his "creature", the programmer does not know who its rivals will be, and once it is released into MARS the program must fend for itself.
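The following toy sketch gives an idea of how such a program lives in memory. It models a MARS-like core in Python and supports only two Redcode opcodes, DAT and MOV, with relative addressing. The real MARS (Memory Array Redcode Simulator) supports many more instructions and addressing modes, and its core is much larger. The sketch runs the classic "Imp", `MOV 0, 1`, which copies itself into the next cell and then goes on to execute that copy.

```python
from dataclasses import dataclass

CORE_SIZE = 16   # toy size; real cores are far larger

@dataclass(frozen=True)
class Instr:
    op: str      # "DAT" or "MOV" in this toy subset of Redcode
    a: int = 0   # operands are addresses relative to the current instruction
    b: int = 0

def step(core, pc):
    """Execute one instruction at pc; return the next pc, or None if the process dies."""
    instr = core[pc]
    if instr.op == "MOV":
        # Copy the cell at pc+a into the cell at pc+b (memory wraps around).
        core[(pc + instr.b) % CORE_SIZE] = core[(pc + instr.a) % CORE_SIZE]
        return (pc + 1) % CORE_SIZE
    return None  # executing DAT (plain data) kills the process

core = [Instr("DAT")] * CORE_SIZE
core[0] = Instr("MOV", 0, 1)   # the Imp: copies itself one cell ahead
pc = 0
for _ in range(10):
    pc = step(core, pc)
print(sum(cell.op == "MOV" for cell in core), pc)  # 11 10
```

After ten steps the Imp has filled cells 0 to 10 with copies of itself. A rival that jumps into any of those cells would start executing the Imp too. This kind of behavior is what let programs be seen as autonomous "creatures".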

Besides attracting many players, Dewdney's ideas proved very fruitful in computing: notably, the first computer viruses were inspired by them. It was also shown that programs can operate in unknown environments, much as autonomous organisms do. The possibility of developing pseudo-biological survival strategies soon became apparent as well. Aggressive, adaptive and self-reproducing programs appeared, along with programs able to repair themselves.

In 1990 Rasmussen, Knudsen and Feldberg proposed the Venus system. The question was whether programs like those designed to run in MARS could arise through their own processes. When Venus's memory is filled with random, disordered instructions, some of them start to execute. In the process, computational structures can be seen organizing themselves spontaneously.

Applying the techniques of dynamical systems yields some significant conclusions. Some instructions tend to work together and form simple functional units, and invariant behaviors and dynamic attractors appear. The authors of the system hoped to obtain programs that could be considered autonomous. However, even in some experiments that did not start from the random initial distribution, they did not achieve their objective.

To read more: Emmeche, C. (1994). The Garden in the Machine: The Emerging Science of Artificial Life. Princeton (NJ): Princeton University Press.

Venus

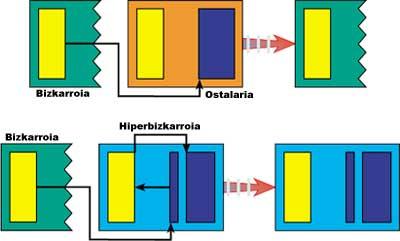

The results were somewhat disappointing. They showed that the survival strategies possible in computer ecosystems are hard to obtain starting from random initial conditions. Aware of the limits of that approach, the researcher Tom Ray took a new direction in designing the Tierra (Spanish for "Earth") system. Rather than trying to obtain "organisms" from a primordial soup, he started from a program he had designed himself, the Ancestor. Although this may seem like a step backwards, the system's new features stand out:

- The Ancestor can copy itself over and over again (Figure 5), so the relevant phenomena in Tierra take place at the population level.

- Direct interaction between programs is prohibited, because each program is write-protected: other programs can read and execute its code but cannot modify it. Since programs are thus in principle invulnerable, a reaper mechanism is introduced to eliminate the oldest or most error-prone programs.

- Mutations can arise in several ways. In Tierra any bit can change at random ("cosmic rays"), the copying mechanism occasionally fails ("genetic errors"), and instructions may occasionally execute incorrectly ("functional errors").
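The mechanisms listed above can be illustrated with a small sketch of a memory "soup" in Python. Everything in it is a simplifying assumption: the `Soup` class and its methods are hypothetical, opcodes are taken to be 5-bit values, and no code is actually executed. The sketch covers write protection, copy errors, cosmic-ray bit flips and a reaper queue in which error-prone creatures move towards the head. The third source of mutation, faulty instruction execution, is left out.

```python
import random
from collections import deque

OPCODE_BITS = 5  # assumption: 5-bit opcodes, i.e. values 0..31

class Soup:
    """Toy memory "soup" with Tierra-style mechanisms (hypothetical API)."""

    def __init__(self, size, rng):
        self.mem = [0] * size         # one opcode per cell
        self.owner = [None] * size    # creature that owns each cell, if any
        self.rng = rng
        self.reaper = deque()         # reaper queue: the head dies first

    def write(self, writer, addr, value):
        # Write protection: any program may read or execute any cell, but only
        # the owner of a cell (or anyone, if the cell is free) may overwrite it.
        addr %= len(self.mem)
        if self.owner[addr] not in (None, writer):
            return False
        self.mem[addr] = value
        return True

    def copy(self, writer, src, dst, length, error_rate):
        # Self-replication with occasional "genetic errors" during copying.
        for i in range(length):
            value = self.mem[(src + i) % len(self.mem)]
            if self.rng.random() < error_rate:
                value = self.rng.randrange(2 ** OPCODE_BITS)
            self.write(writer, dst + i, value)  # silently fails on protected cells

    def cosmic_ray(self):
        # "Cosmic rays": flip one random bit of one random cell.
        addr = self.rng.randrange(len(self.mem))
        self.mem[addr] ^= 1 << self.rng.randrange(OPCODE_BITS)

    def birth(self, creature, start, length):
        for i in range(length):
            self.owner[(start + i) % len(self.mem)] = creature
        self.reaper.append(creature)  # newborns join the tail of the queue

    def penalize(self, creature):
        # A creature that causes an error moves one place closer to the head.
        i = self.reaper.index(creature)
        if i > 0:
            self.reaper[i - 1], self.reaper[i] = self.reaper[i], self.reaper[i - 1]

    def reap(self):
        # Kill the creature at the head of the queue and free its memory.
        victim = self.reaper.popleft()
        self.owner = [None if o == victim else o for o in self.owner]
        return victim

rng = random.Random(42)
soup = Soup(64, rng)
soup.mem[0:4] = [1, 2, 3, 4]
soup.birth("ancestor", 0, 4)
print(soup.write("intruder", 0, 9))  # False: the ancestor's cells are protected
soup.copy("ancestor", 0, 10, 4, error_rate=0.1)
soup.birth("daughter", 10, 4)
print(soup.reap())                   # ancestor: the oldest creature dies first
```

In a fuller simulation the reaper would be triggered automatically whenever free memory runs low, rather than called by hand as in this sketch.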

Tierra, which has mechanisms that make inheritance, selection and variation a reality, is a good scenario for evolution. Indeed, the complexity that arises in this ecosystem marks a major leap, and rich pseudo-biological behaviors emerge through evolution. The cyclical phenomena of parasitism and immunity described in Figure 6 are very typical.

Conclusion

Artificial Life can change our view of life and, consequently, cast new light on the relationships between nature and culture. Above all, however, Artificial Life shows us that the analytical attitude of Western science towards the world may be inadequate for developing theories of complex phenomena. When we try to break phenomena down through reasoning, one of two things happens. Either the extracted parts remain as complex as the whole, or we lose along the way the elements that matter most for the explanation. Artificial Life, by contrast, exploits the power of interactions among simple elements to open the way to what may become the new Science of Complexity.
